Closed-Loop Optimization in Laboratory Automation

Building systems that learn from experimental results and automatically improve processes—covering optimization algorithms, feedback architectures, and practical implementation patterns.

Designing AI agents that can understand, plan, and execute laboratory instrument operations—covering agent architectures, tool abstraction patterns, and safety boundaries.

Designing systems that convert natural language instructions into structured, validated laboratory protocols—covering representation formats, LLM pipelines, and safety verification.

A comprehensive look at where AI stands in lab automation today—the promising advances, the persistent challenges, and the gap between research demos and production-ready systems.

A practical guide to collecting, structuring, and leveraging data from distributed industrial systems—where each PC runs different environments and logs are your only starting point.

Exploring how LLMs are transforming laboratory automation—from interpreting human commands to orchestrating robotic workflows—and the practical considerations for deployment in air-gapped environments.

Exploring how VLMs could transform laboratory automation, and the practical constraints of deploying AI in air-gapped industrial environments.

A practical guide to deploying AI capabilities on air-gapped systems—from local inference engines to edge-optimized models and hybrid architectures.

Key ML-for-trading concepts from Georgia Tech OMSCS ML4T (CS 7646): portfolio theory, signals, risk, and evaluation.

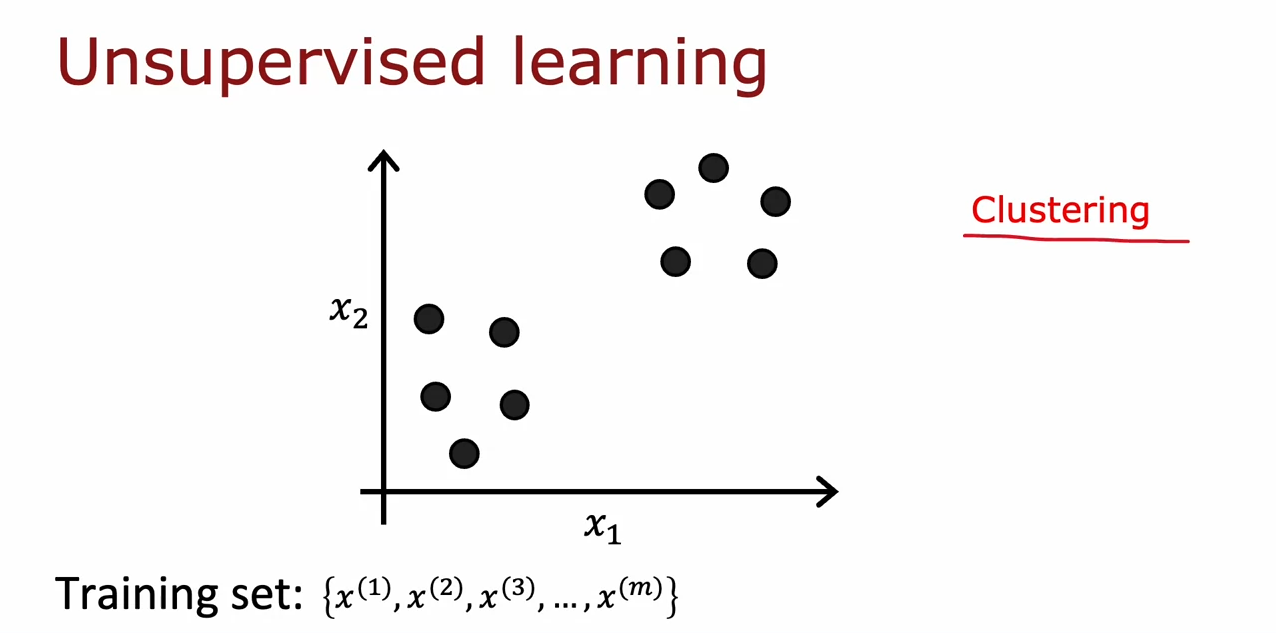

Explore core concepts of unsupervised learning, including K-means clustering, optimization strategies, and how anomaly detection systems are designed and evaluated.

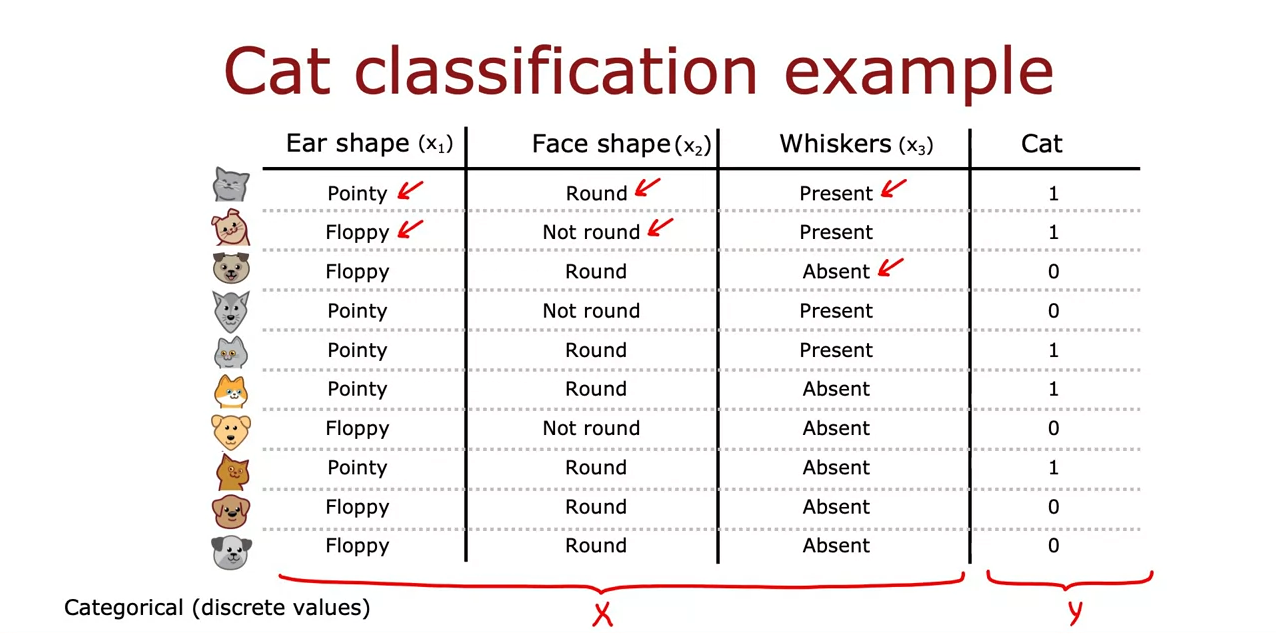

A complete guide to decision trees, covering entropy, information gain, one-hot encoding, regression trees, and ensemble methods like Random Forest and XGBoost.

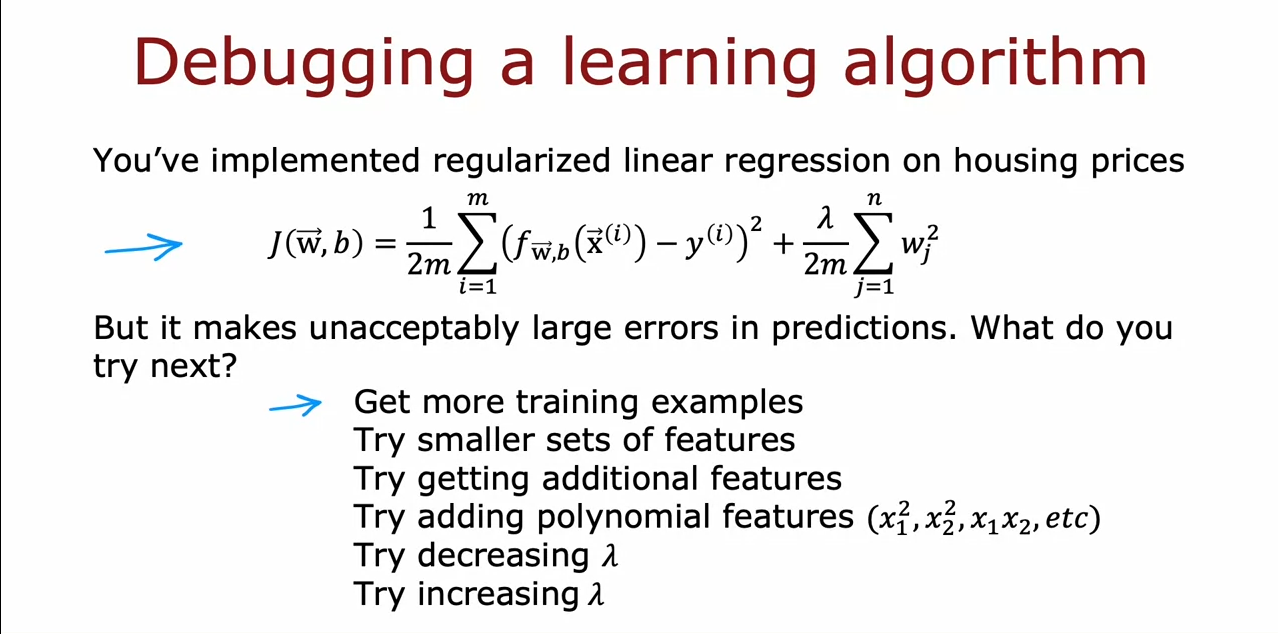

Learn how to decide what to try next, evaluate models, diagnose and address bias and variance, and manage real-world ML workflows with cross-validation, error analysis, and transfer learning.

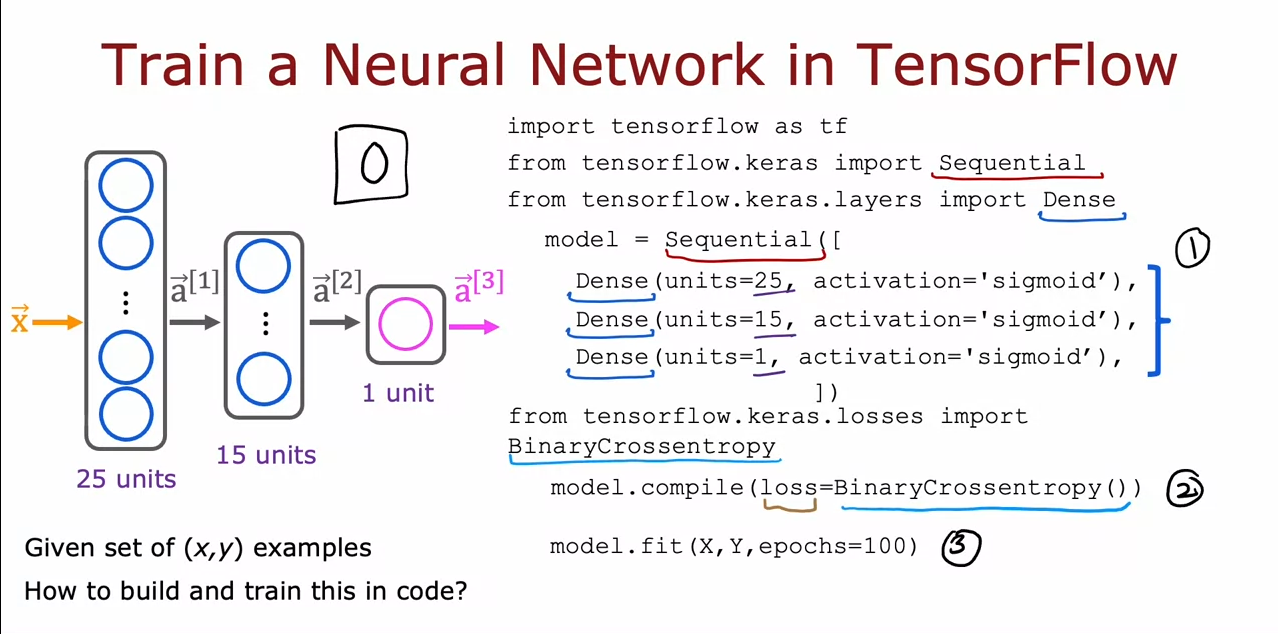

Explore how neural networks are trained in TensorFlow with gradient descent and backpropagation, how softmax handles multiclass classification, and how to choose activation functions.

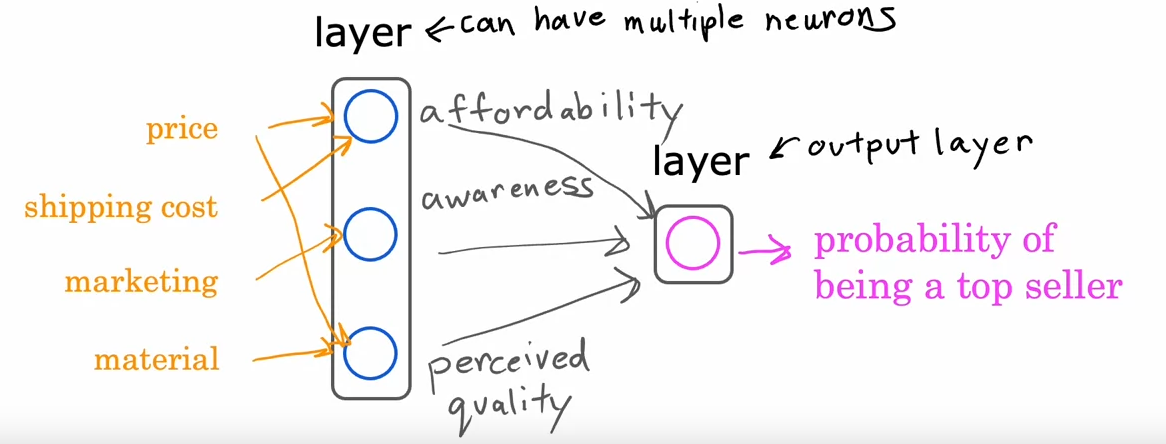

A comprehensive guide to neural networks, forward propagation, TensorFlow implementation, and efficient matrix computations.

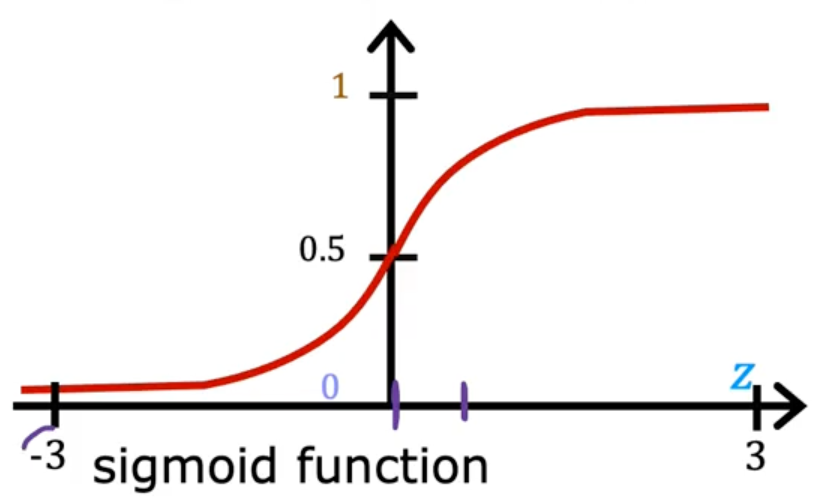

A comprehensive breakdown of logistic regression for classification: the sigmoid function, loss functions, and regularization.

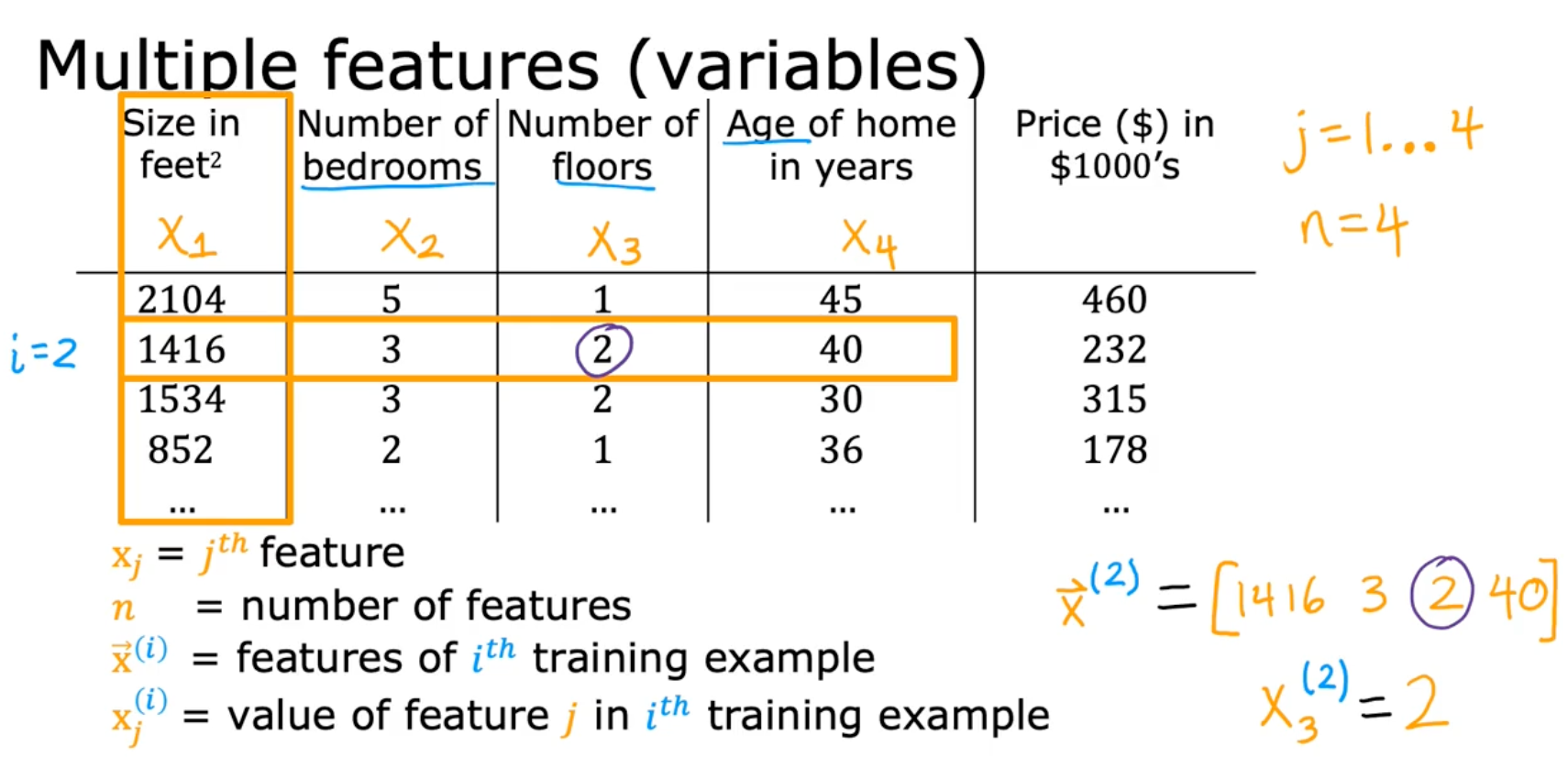

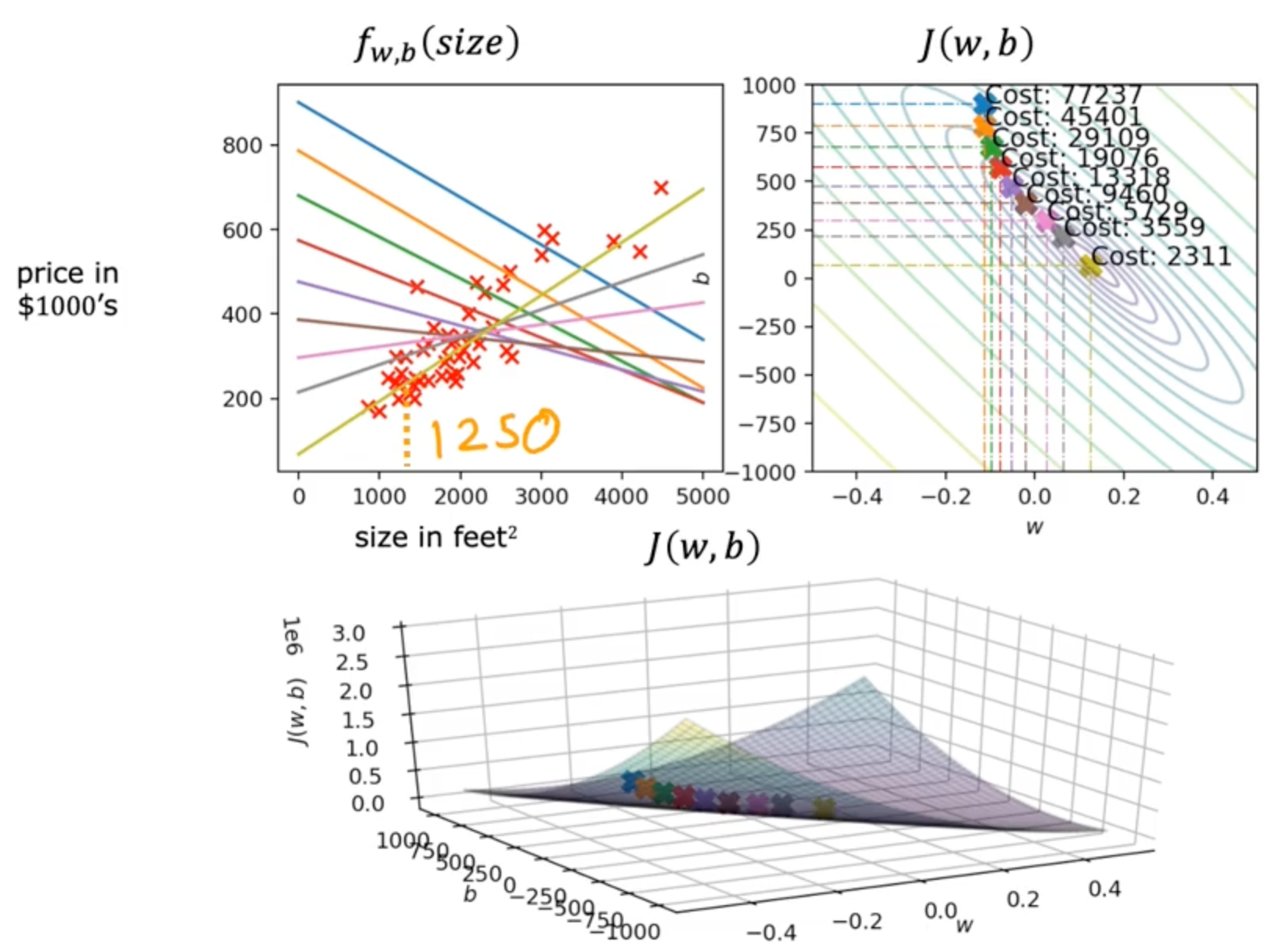

A deep dive into multiple linear regression, vectorization, gradient descent, feature scaling, and polynomial regression.

An overview of supervised and unsupervised learning, linear regression, and gradient descent.